
AI-Ready Networking Stack.

Open, multi-vendor, AI-ready products that drive TCO savings and long-term ROI.

Network Operations Suite

Fabric Test Automation Suite for SONiC

ONES for NVIDIA AI Factory

ONES for SONiC

Network Observability Suite

Aviz Service Nodes

Flow Vision

Aviz Packet Broker

Aviz Service Nodes for NVIDIA BlueField-3

Make Networks for AI. Introduce AI in your Networks.

End-to-end solutions for any NOS, any switch, any ASIC, any LLM, and any application, backed by partner best practices, proven technology, and SLAs.

Industry

Explore why Aviz is the best partner to modernize your network.

Explore Case Studies, TCO and ROI Calculators, Certifications, Community, and Newsroom.

Aviz Training and Certification

Learn and get certified in SONiC and AI.

Partner with Aviz Networks

Join our ecosystem of channel and technology partners. Together we deliver open networking solutions that drive innovation and growth.

Tailored for Your Role.

Explore solutions and tools built for operators, architects, CXOs, and ecosystem partners.

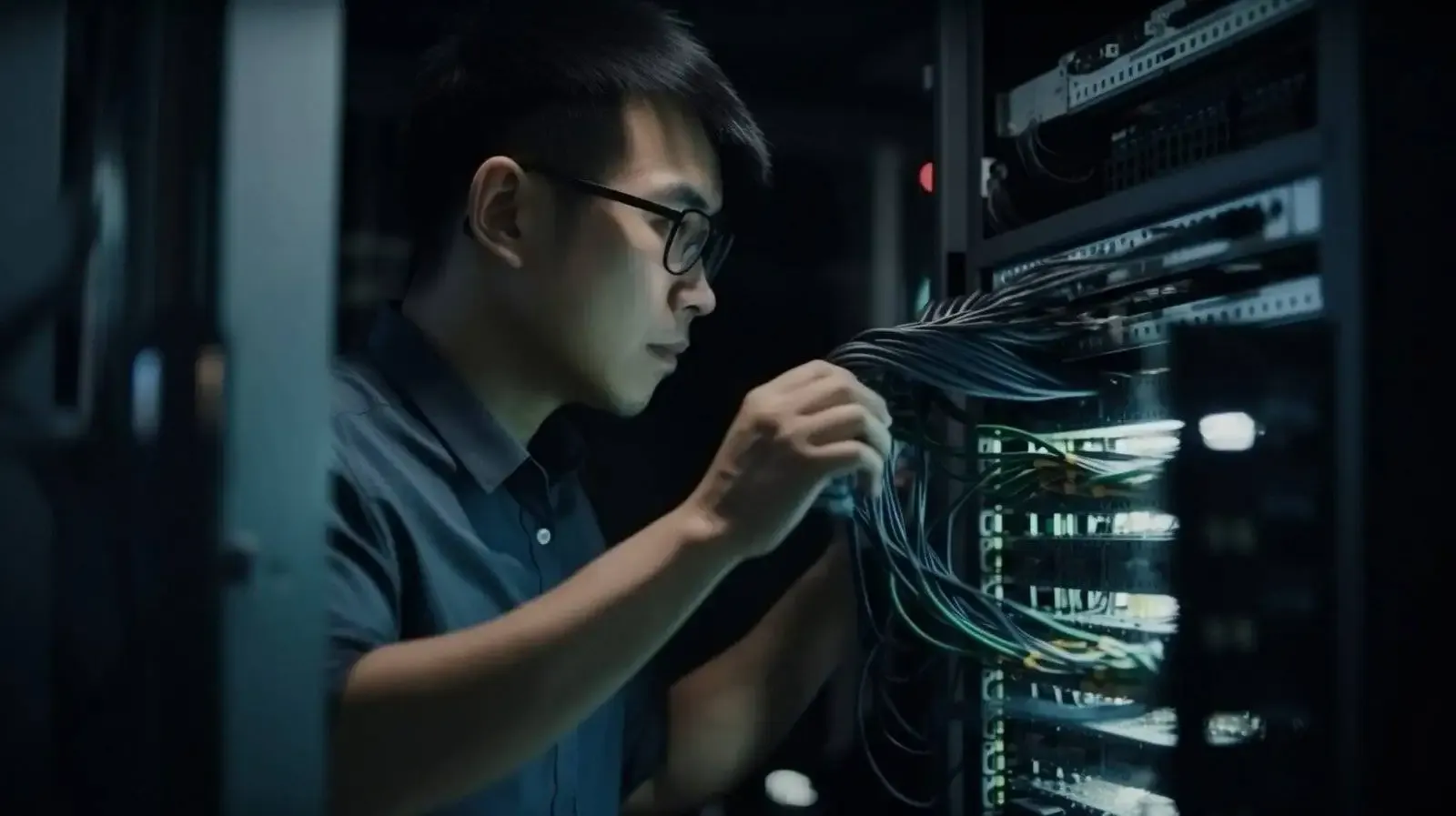

24/7 World-Class SONiC Support & Proven Services.

Our dedicated team delivers round-the-clock, world-class SONiC support with unmatched quality, scalability, and efficiency, keeping your network optimized, secure, and always running at its best.

Documentation

Aviz Service Nodes

Fabric Test Automation Suite

Network Copilot™

Aviz Packet Broker

Open Networking Enterprise Suite

Support

Submit Ticket