Learn how FTAS can do it for you!

Why Should Organizations Consider SONiC?

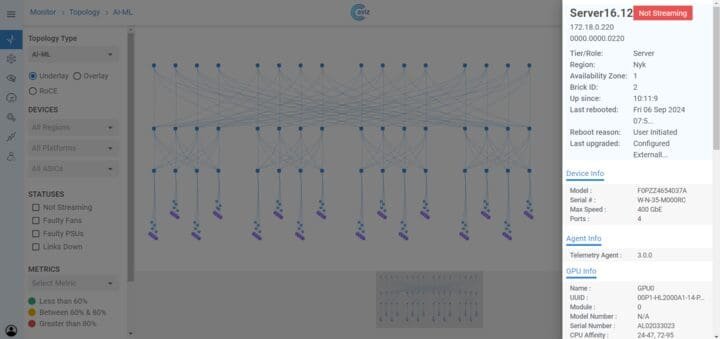

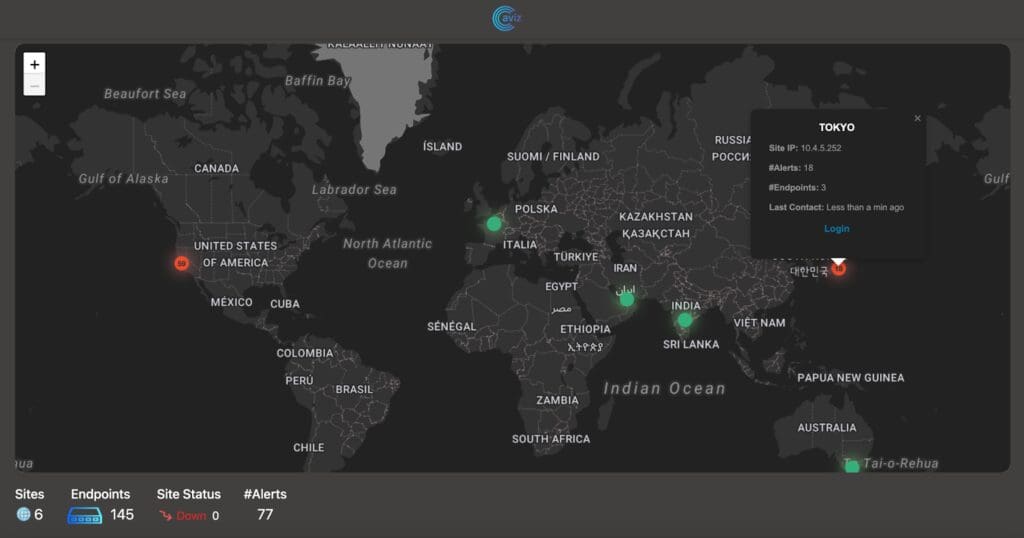

In today’s rapidly evolving networking landscape, organizations are seeking greater flexibility, scalability, and cost-effectiveness. SONiC (Software for Open Networking in the Cloud) has emerged as a leading open-source platform for building and managing data center networks.

SONiC empowers network operators to break free from vendor lock-in, reduce operational costs, and accelerate innovation. By providing a vendor-agnostic, open-source framework, SONiC offers unprecedented flexibility and control over network infrastructure.

What Makes Evaluating SONiC So Challenging?

While SONiC offers numerous benefits, evaluating and deploying it can be daunting for several reasons:

- 1. Multiple Vendors: The diverse vendor landscape for SONiC hardware and software can complicate the evaluation process. Different vendors offer varying levels of support, features, and performance.

- 2. Multiple SONiC Flavors: The existence of community and vendor-specific SONiC distributions introduces additional complexity. While community SONiC offers flexibility, vendor-specific versions often provide additional features and support.

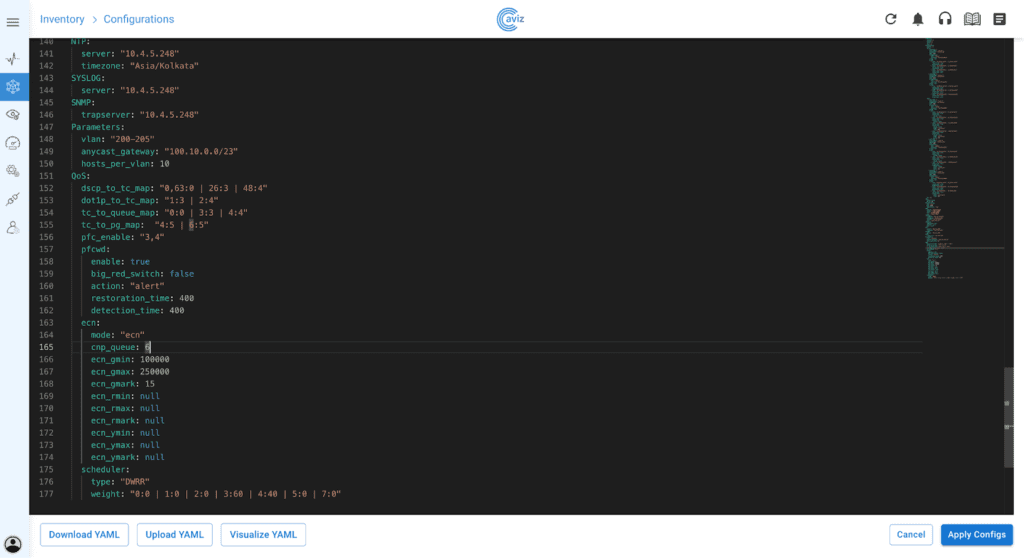

- 3. Complexity of Network Configurations: SONiC's flexibility and extensive feature set can lead to complex network configurations. This requires careful planning and testing to ensure optimal performance and reliability.

- 4. Need for SONiC Expertise: Effective SONiC evaluation and deployment demand specialized knowledge and skills. This can be a significant barrier for organizations without in-house SONiC expertise.

- 5. Volume of Features: The sheer volume of features and capabilities offered by SONiC can make it overwhelming to evaluate and configure. It requires a deep understanding of networking concepts and SONiC's specific implementation.
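To make the scale of these challenges concrete, here is a minimal sketch that counts the combinations an evaluation might need to cover. The vendor, distribution, and feature names are placeholders chosen for illustration, not a list of actual products or of anything FTAS defines.

```python
from itertools import product

# Placeholder names for illustration only; substitute your own candidates.
hardware_vendors = ["vendor-a", "vendor-b", "vendor-c"]
sonic_flavors = ["community", "vendor-specific"]
feature_areas = ["layer2", "layer3", "vxlan-evpn", "qos", "resilience"]


def evaluation_matrix(vendors, flavors, features):
    """Return every (vendor, flavor, feature) combination to be evaluated."""
    return list(product(vendors, flavors, features))


matrix = evaluation_matrix(hardware_vendors, sonic_flavors, feature_areas)
print(len(matrix))  # 3 * 2 * 5 = 30 combinations
```

Even with these small, assumed lists, the matrix reaches 30 combinations, and each added vendor or feature area multiplies it further. That growth is why manual evaluation tends not to scale.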

How to Accelerate SONiC Evaluations with FTAS

- 1. Accelerated Testing: FTAS automates a wide range of tests, including Layer 2 and Layer 3 functionality, high-availability features, and security protocols. This significantly speeds up the evaluation process.

- 2. Enhanced Reliability: FTAS rigorously tests SONiC configurations to ensure optimal performance and stability. By identifying and addressing potential issues early on, FTAS helps to minimize risks.

- 3. Faster Deployment: FTAS accelerates firmware and SONiC image qualification processes by automating testing and validation. This significantly reduces time-to-market for new features and fixes.

- 4. Continuous Validation: FTAS can be integrated into your CI/CD pipeline to provide continuous validation and ensure the highest quality standards. By automating testing and validation processes, FTAS accelerates development cycles and reduces the risk of introducing defects.

- 5. Day 2 Feature Testing: FTAS covers a wide range of day 2 features such as software upgrades, configuration changes, and troubleshooting, ensuring smooth operations and minimal downtime.

- 6. Resilience Testing: FTAS rigorously tests the system's stability in the face of reboots, container crashes, and link events at scale, ensuring business continuity and minimal service disruptions.

- 7. Scalability Testing: FTAS evaluates the scalability of SONiC deployments by testing the system's ability to handle increasing workloads and traffic volumes.

- 8. Stress Testing: FTAS subjects the SONiC network to extreme stress conditions to identify performance bottlenecks and potential failure points, ensuring the system's robustness and reliability.
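The resilience idea described above (disrupt the system, then confirm it recovers) can be sketched in a few lines. This is a toy model under stated assumptions, not FTAS's actual interface: `SimulatedSwitch`, `run_resilience_check`, and the use of a BGP session count as the health signal are all hypothetical names and choices made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class SimulatedSwitch:
    """Hypothetical stand-in for a device under test; a real harness would talk to hardware."""
    expected_bgp_sessions: int = 4
    established: int = 4
    polls_to_recover: int = 3
    _polls_left: int = field(default=0, repr=False)

    def disrupt(self, event: str) -> None:
        # In this toy model, any disruptive event drops all sessions.
        self.established = 0
        self._polls_left = self.polls_to_recover

    def poll(self) -> int:
        # Each poll moves the device one step closer to recovery.
        if self._polls_left > 0:
            self._polls_left -= 1
            if self._polls_left == 0:
                self.established = self.expected_bgp_sessions
        return self.established


def run_resilience_check(switch, events, max_polls=5):
    """Apply each event, then poll until all sessions return or polls run out."""
    results = {}
    for event in events:
        switch.disrupt(event)
        recovered = False
        for _ in range(max_polls):
            if switch.poll() == switch.expected_bgp_sessions:
                recovered = True
                break
        results[event] = recovered
    return results


results = run_resilience_check(
    SimulatedSwitch(), ["reboot", "container-crash", "link-flap"]
)
print(results)
```

A per-event pass/fail map like this is the kind of result a CI/CD job could gate on, failing the pipeline when any event does not recover within the allowed number of polls.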

How Does FTAS Keep Your Networks on Par with Quality Standards?

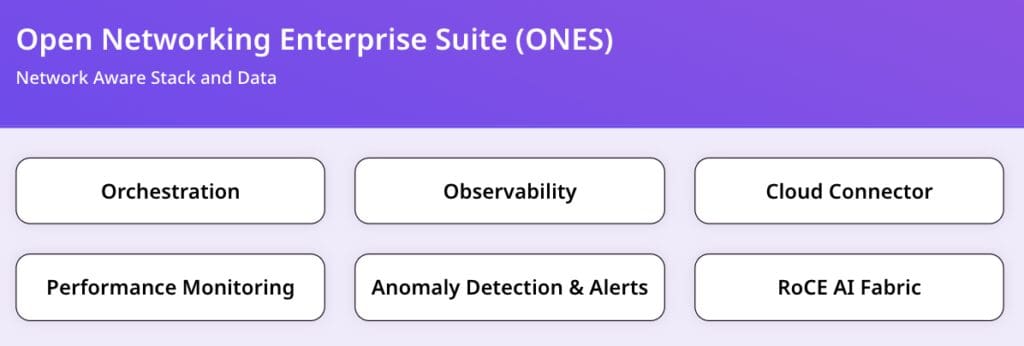

Aviz Networks’ Fabric Test Automation Suite (FTAS) is a powerful tool designed to ensure the quality and reliability of SONiC networks. By automating testing and validation processes, FTAS helps organizations accelerate deployment, reduce operational costs, and minimize risks.

FTAS helps maintain network quality by:

- 1. Standardized Testing: Ensuring consistent quality across different vendor hardware and software.

- 2. Automated Testing: Reducing manual effort and accelerating testing cycles.

- 3. CI/CD Integration: Integrating with CI/CD pipelines for continuous validation.

- 4. Performance, Reliability, and Scalability Focus: Ensuring networks meet demanding requirements.

- 5. Detailed Reporting and Analysis: Providing insights for optimization and troubleshooting.

- 6. Evolving with Industry Needs: Adapting to real-world challenges and customer feedback.

Supported Protocols

FTAS supports a wide range of protocols essential for modern data center networks:

- 1. Layer 2/Layer 3 Protocols:

- Ethernet

- IP (IPv4/IPv6)

- 2. Overlay Protocols:

- VXLAN

- EVPN

- 3. Control Plane Protocols:

- BGP

- STP

- LACP

- MLAG

- 4. Other Protocols:

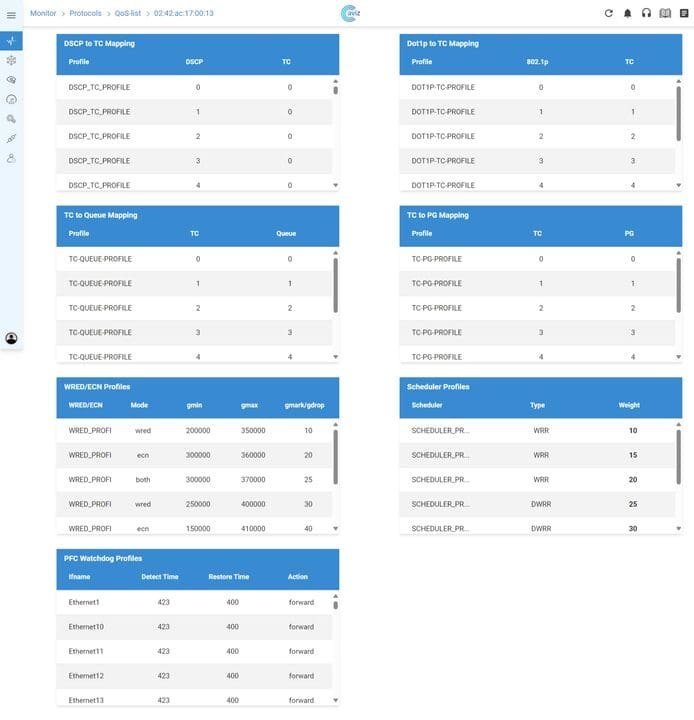

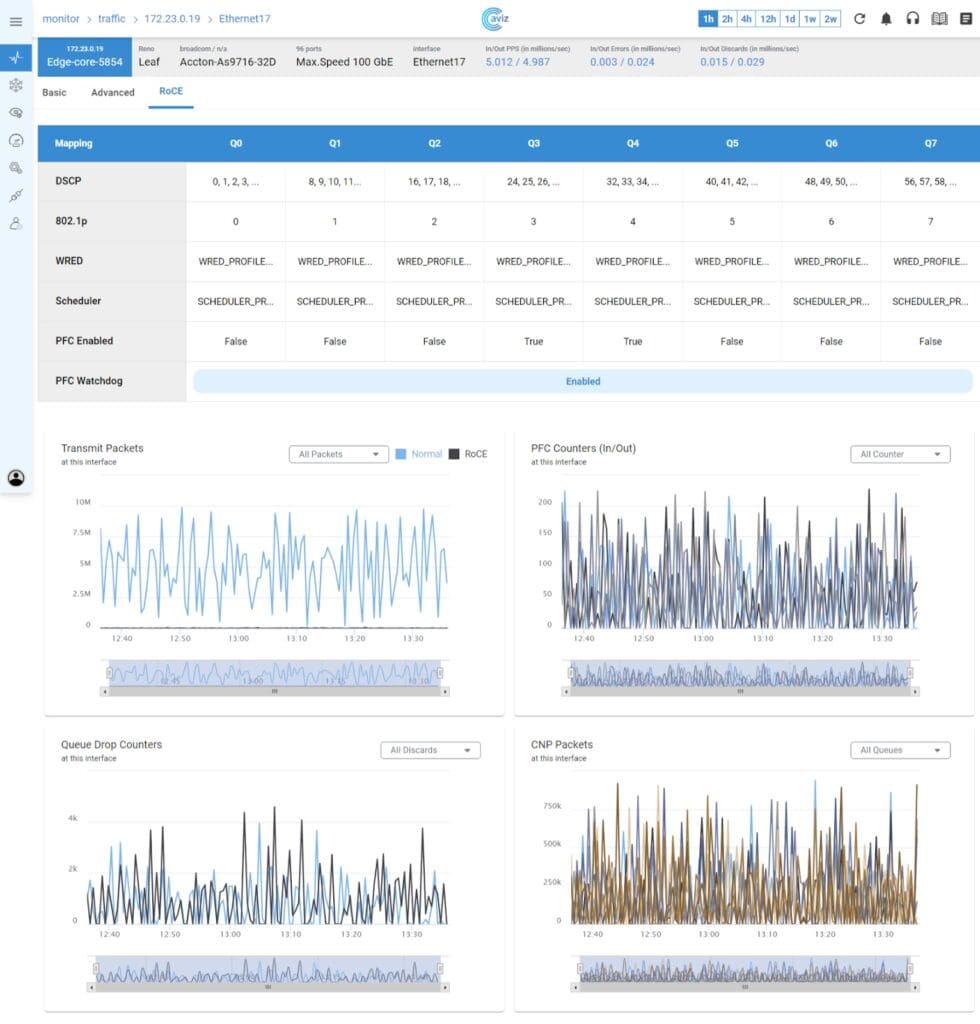

- QoS

What Are the New Features in FTAS 3.1?

- 1. QoS Testing: Comprehensive testing of Quality of Service (QoS) features to ensure optimal network performance.

- 2. Stress Testing: Rigorous stress testing to validate the stability and scalability of your SONiC network under heavy load.

- 3. EVPN/L2VXLAN Enhancements: Expanded coverage of EVPN and L2VXLAN features, ensuring seamless Layer 2 and Layer 3 network virtualization.

- 4. More SNMP Coverage: Enhanced SNMP monitoring and troubleshooting capabilities for better network visibility and control.

- 5. Fast Reboot Testing: Rigorous testing of fast reboot mechanisms to minimize downtime during software upgrades and reboots.

- 6. Platform CLI Coverage: Comprehensive testing of platform-specific CLI commands to ensure compatibility and functionality.

- 7. More Verifications in Resilience Test Cases: Increased verification depth in resilience testing to identify and mitigate potential single points of failure.

How to Use FTAS

To use FTAS, schedule a call with our team. For comprehensive information before the call, visit our FTAS product page.