Explore the latest in AI network management with our ONES 3.0 series

Future of Intelligent Networking for AI Fabric Optimization

If you’re operating a high-performance data center or managing AI/ML workloads, ONES 3.0 offers advanced features that ensure your network remains optimized and congestion-free, with lossless data transmission as a core priority.

In today’s fast-paced, AI-driven world, network infrastructure must evolve to meet the growing demands of high-performance computing, real-time data processing, and seamless communication. As organizations build increasingly complex AI models, low-latency, lossless data transmission and sophisticated scheduling of network traffic have become crucial. ONES 3.0 is designed to address these requirements by offering cutting-edge tools for managing AI fabrics with precision and scalability.

Building on the solid foundation laid by ONES 2.0, where RoCE (RDMA over Converged Ethernet) support enabled lossless communication and enhanced proactive congestion management, ONES 3.0 takes these capabilities to the next level. We’ve further improved RoCE features with the introduction of PFC Watchdog (PFCWD) for enhanced fault tolerance, Scheduler for optimized traffic handling, and WRED for intelligent queue management, ensuring that AI workloads remain highly efficient and resilient, even in the most demanding environments.

Why RoCE is Critical for Building AI Models

As the next generation of AI models requires vast amounts of data to be transferred quickly and reliably across nodes, RoCE becomes an indispensable technology. By enabling remote direct memory access (RDMA) over Ethernet, RoCE facilitates low-latency, high-throughput, and lossless data transmission—all critical elements in building and training modern AI models.

In AI workloads, scheduling data packets effectively ensures that model training is not delayed due to network congestion or packet loss. On a fabric configured with flow control and congestion signaling, RoCE traffic can be prioritized and carried without loss, allowing AI models to operate at optimal speeds and making it a strong fit for today’s AI infrastructures. Whether it’s transferring large datasets between GPU clusters or ensuring smooth communication between nodes in a distributed AI system, RoCE helps critical data flow without compromising performance.

Enhancing RoCE Capabilities from ONES 2.0 to ONES 3.0

In ONES 3.0, we’ve taken RoCE management even further, enhancing the ability to monitor and optimize Priority Flow Control (PFC) and ensuring lossless RDMA traffic under heavy network loads. The new PFC Watchdog (PFCWD) detects queues that remain paused for too long, for example because of a misconfiguration or a malfunctioning peer, and addresses the condition in real time, preventing traffic stalls or congestion collapse in AI-driven environments.
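To make the watchdog idea concrete, here is a minimal Python sketch of storm detection for a single lossless queue. The class name, the 200 ms detection window, the 400 ms restoration window, and the polling model are illustrative assumptions, not the ONES or switch-OS implementation.

```python
from dataclasses import dataclass


@dataclass
class PfcWatchdog:
    """Toy PFC watchdog for one lossless queue (hypothetical model)."""
    detection_ms: int = 200    # assumed: continuous pause time before declaring a storm
    restoration_ms: int = 400  # assumed: pause-free time before leaving the storm state
    paused_ms: int = 0
    clear_ms: int = 0
    storming: bool = False

    def poll(self, queue_paused: bool, interval_ms: int) -> bool:
        """Feed one polling sample; return True while the queue is in the storm state."""
        if queue_paused:
            self.paused_ms += interval_ms
            self.clear_ms = 0
            if self.paused_ms >= self.detection_ms:
                self.storming = True
        else:
            self.clear_ms += interval_ms
            self.paused_ms = 0
            if self.storming and self.clear_ms >= self.restoration_ms:
                self.storming = False
        return self.storming


wd = PfcWatchdog()
# Three consecutive paused samples, 100 ms apart, cross the 200 ms detection window.
states = [wd.poll(True, 100) for _ in range(3)]
print(states)
```

A real watchdog would also apply a mitigation action while the storm lasts; that part is left out of this sketch.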

Additionally, ONES 3.0’s Scheduler allows for more sophisticated data packet scheduling, ensuring that AI tasks are executed with precision and efficiency. Combined with WRED (Weighted Random Early Detection), which intelligently manages queue drops to prevent buffer overflow in congested networks, ONES 3.0 provides a holistic solution for RoCE-enabled AI fabrics.
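As a rough illustration of how WRED behaves, the sketch below computes the classic RED-style probability curve: zero below a minimum threshold, rising linearly to a maximum probability, and certain above the maximum threshold. The function name and the threshold values are assumptions chosen for the example, not ONES defaults. When ECN is enabled, the same curve typically governs marking rather than dropping.

```python
def wred_probability(avg_queue: float, min_th: float, max_th: float, max_p: float) -> float:
    """Return the drop (or ECN-mark) probability for an average queue depth."""
    if avg_queue < min_th:
        return 0.0          # queue is shallow: never drop or mark
    if avg_queue >= max_th:
        return 1.0          # queue is past the maximum threshold: always drop or mark
    # Linear ramp from 0 at min_th up to max_p at max_th.
    return max_p * (avg_queue - min_th) / (max_th - min_th)


# Illustrative thresholds in kilobytes (assumed values).
for depth in (500, 1500, 2500):
    print(depth, wred_probability(depth, min_th=1000, max_th=2000, max_p=0.1))
```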

The Importance of QoS and RoCE in AI Networks

Quality of Service (QoS) and RoCE are pivotal in ensuring that AI networks can handle the rigorous demands of real-time processing and massive data exchanges without performance degradation. In environments where AI workloads must process large amounts of data between nodes, QoS ensures that critical tasks receive the required bandwidth, while RoCE ensures that this data is transmitted with minimal latency and no packet loss.

With AI workloads demanding real-time responsiveness, any network inefficiency or congestion can slow down AI model training, leading to delays and sub-optimal performance. The advanced QoS mechanisms in ONES 3.0, combined with enhanced RoCE features, provide the necessary tools to prioritize traffic, monitor congestion, and optimize the network for the low-latency, high-reliability communication that AI models depend on.

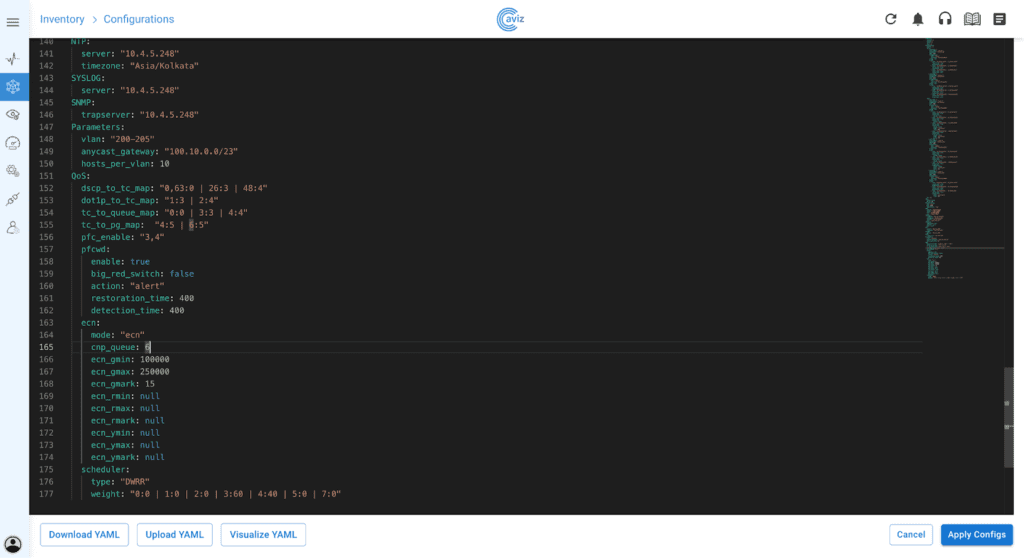

In ONES 3.0, QoS features such as DSCP mapping, WRED, and scheduling profiles allow customers to:

1. Prioritize AI-related traffic over other types of traffic, ensuring faster model training and lower latency.

2. Avoid congestion and packet loss, especially during periods of high traffic.

By leveraging QoS in combination with RoCE, ONES 3.0 creates an optimized environment for AI networks, allowing customers to confidently build and train next-generation AI models without worrying about data bottlenecks.
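One way to reason about a scheduling profile is to look at how much bandwidth each queue is guaranteed when every queue has traffic waiting. The sketch below assumes a weighted (DWRR-style) scheduler; the queue names, weights, and 400 Gbps link speed are hypothetical values for illustration.

```python
def bandwidth_shares(weights: dict, link_gbps: float) -> dict:
    """Split link bandwidth across queues in proportion to their scheduler weights."""
    total = sum(weights.values())
    return {queue: link_gbps * weight / total for queue, weight in weights.items()}


# Assumed weights: the RoCE queue gets half of the link under full contention.
shares = bandwidth_shares({"roce": 50, "default": 30, "storage": 20}, link_gbps=400.0)
print(shares)
```

When some queues are idle, a work-conserving scheduler lets the busy queues use the spare capacity, so these figures are minimums rather than caps.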

1. Comprehensive Interface and Performance Metrics

1. Track traffic patterns and identify congestion points.

2. Quickly detect and troubleshoot network anomalies, ensuring smooth data transmission.

2. RoCE Config Visualization

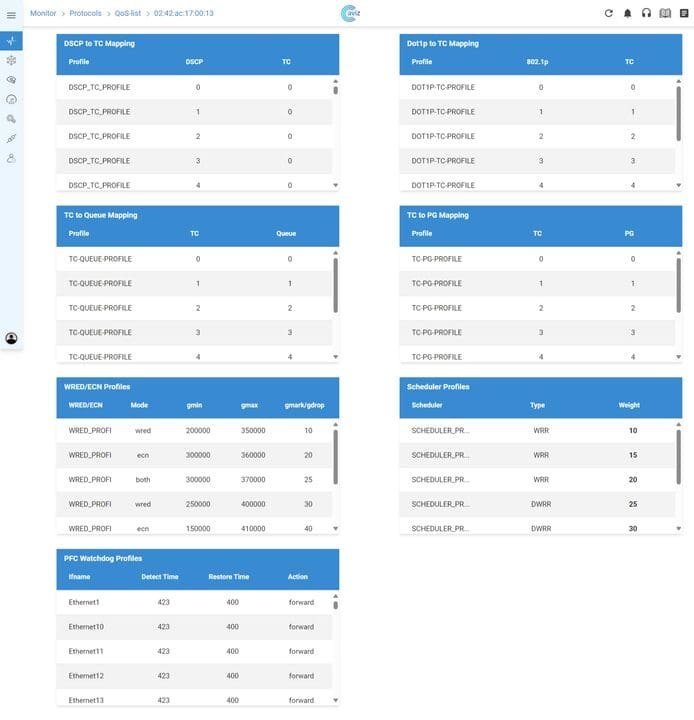

1. DSCP Mapping: Differentiated Services Code Point (DSCP) values are mapped to specific traffic queues, ensuring proper prioritization of packets.

2. 802.1p Mapping: Allows customers to map Layer 2 traffic to different queues based on priority, which helps in optimizing scheduling for time-sensitive traffic.

3. WRED and Scheduler Profiles: The Weighted Random Early Detection (WRED) profile and Scheduler profile work together to manage congestion. WRED signals congestion early by dropping or, when ECN is enabled, marking packets before a queue fills, while the scheduler controls how queues share link bandwidth. Together they keep traffic queued and forwarded efficiently and help lossless queues avoid overflow, which is critical for AI data pipelines.

4. PFC and PFC Watchdog: Priority Flow Control (PFC) is a cornerstone feature that allows customers to create lossless data paths for high-priority traffic by pausing the sender instead of dropping packets during network congestion. The PFC Watchdog monitors PFC-enabled queues for pauses that persist too long and mitigates them, so that a stuck queue does not stall the rest of the fabric.
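The mapping chain described above can be sketched as a pair of lookups: a packet's DSCP or 802.1p value selects a traffic class, and the traffic class selects a queue that may or may not be PFC-enabled. All table contents below are hypothetical examples, and preferring DSCP over 802.1p is an assumption about the port's trust mode, not a statement of how ONES or a given switch is configured.

```python
# Hypothetical mapping tables; the values are illustrative only.
DSCP_TO_TC = {26: 3, 48: 6}       # e.g. RoCE data and congestion-notification traffic
DOT1P_TO_TC = {3: 3, 6: 6}        # Layer 2 priority to traffic class
TC_TO_QUEUE = {0: 0, 3: 3, 6: 6}
PFC_ENABLED_QUEUES = {3}          # lossless queue(s)
DEFAULT_TC = 0


def classify(dscp=None, dot1p=None):
    """Return (queue, lossless) for a packet, preferring DSCP over 802.1p (assumed)."""
    if dscp is not None:
        tc = DSCP_TO_TC.get(dscp, DEFAULT_TC)
    elif dot1p is not None:
        tc = DOT1P_TO_TC.get(dot1p, DEFAULT_TC)
    else:
        tc = DEFAULT_TC
    queue = TC_TO_QUEUE.get(tc, 0)
    return queue, queue in PFC_ENABLED_QUEUES


print(classify(dscp=26))   # mapped to the lossless queue in this example
print(classify(dscp=0))    # unmapped DSCP falls back to the default queue
print(classify(dot1p=6))   # Layer 2 priority used when no DSCP is given
```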

3. Visual Traffic Monitoring: A Data-Driven Experience

1. Transmit Packets: Keep an eye on both RoCE and normal traffic, and make sure that high-priority AI data packets are transmitted efficiently across the network.

2. PFC Counters: Get detailed insights into PFC activity in both the inbound and outbound directions, confirming that flow control mechanisms are functioning as intended.

3. Queue Drop Counters: Understand where and when packet drops happen. By tracking packet discards, you can identify and address congestion issues, improving your network’s overall performance.

4. Congestion Notification Packets (CNP): RoCE relies heavily on lossless data transmission, and CNPs play a crucial role in signaling congestion. When a receiver sees ECN-marked packets, it sends CNPs back to the sender, which then reduces its transmission rate. Monitoring CNP activity shows where and how often the network is signaling congestion, helping you minimize packet loss and delay.
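Counters like these are usually cumulative, so a chart of activity over time is built from the difference between two snapshots. The sketch below shows that conversion; the counter names and sample values are made up for the example and do not reflect the ONES telemetry schema. It also ignores counter wrap and resets, which a production collector would need to handle.

```python
def counter_rates(prev: dict, curr: dict, interval_s: float) -> dict:
    """Convert two snapshots of monotonically increasing counters into per-second rates."""
    return {
        name: (curr[name] - prev[name]) / interval_s
        for name in curr
        if name in prev
    }


# Hypothetical snapshots taken 30 seconds apart.
prev = {"tx_packets": 1_000_000, "pfc_rx": 40, "queue_drops": 0, "cnp_rx": 500}
curr = {"tx_packets": 1_600_000, "pfc_rx": 100, "queue_drops": 0, "cnp_rx": 800}
rates = counter_rates(prev, curr, interval_s=30)
print(rates)
```

A nonzero PFC or CNP rate alongside zero queue drops suggests that congestion is being signaled and absorbed rather than turning into loss.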

4. Flexible Time-Based Monitoring and Analysis

1. Analyze short-term network behavior for immediate troubleshooting.

2. Review long-term trends for capacity planning and optimization.

Centralized QoS View

1. Convenient UI Access: Eliminates the need for manual CLI commands by providing a UI-based platform to view all QoS configurations across switches, saving time and effort.

2. Real-Time Monitoring: Enables immediate detection and resolution of traffic issues with live updates on queue and profile status, reducing troubleshooting time.

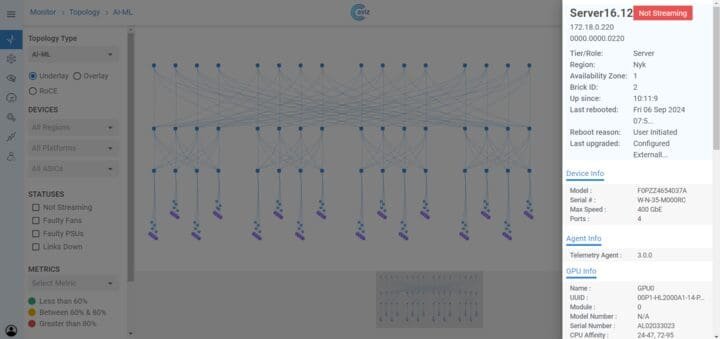

Comprehensive Topology View

1. Real-Time Device Status: Users can monitor individual devices, such as servers and switches, viewing critical details like availability, region, port speeds, and telemetry, helping to quickly detect and resolve issues like non-streaming devices.

2. Fault Detection: The interface highlights issues such as faulty fans, PSUs, or downed links, enabling swift corrective action to prevent network disruptions.

3. Detailed Device Information: Upon selecting a device, detailed metadata is displayed, including hardware specifics (e.g., GPU/CPU info), agent details, uptime, and port configuration, aiding in troubleshooting and performance assessment.

4. Link Details: When clicking on a link in the topology map, users can view all devices connected via that link, providing deeper insight into traffic paths and dependencies. This feature is crucial for diagnosing link-related issues and understanding how data flows between devices.

5. Traffic Monitoring: Analyze metrics like traffic load across links to detect bottlenecks and optimize traffic flows, ensuring smooth performance for high-priority workloads like AI/ML tasks.

6. Easy Navigation and Filtering: The ability to filter by topology type, device statuses, and regions simplifies the monitoring of large, complex networks, increasing management efficiency.
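The traffic-load analysis in the list above comes down to comparing the bytes a link carried over an interval with what it could have carried. The sketch below flags links at or above an assumed 80% utilization threshold; the link names, byte counts, and threshold are hypothetical, and the data layout is not the ONES topology model.

```python
def utilization(delta_bytes: int, interval_s: float, speed_gbps: float) -> float:
    """Fraction of link capacity used over the interval."""
    return (delta_bytes * 8) / (interval_s * speed_gbps * 1e9)


def hot_links(links: list, interval_s: float, threshold: float = 0.8) -> list:
    """Return names of links whose utilization meets or exceeds the threshold."""
    return [
        link["name"]
        for link in links
        if utilization(link["delta_bytes"], interval_s, link["speed_gbps"]) >= threshold
    ]


# Hypothetical byte deltas observed over a 10-second window on two 100G links.
links = [
    {"name": "leaf1:Ethernet0 <-> spine1:Ethernet4", "delta_bytes": 112_500_000_000, "speed_gbps": 100},
    {"name": "leaf2:Ethernet0 <-> spine1:Ethernet8", "delta_bytes": 25_000_000_000, "speed_gbps": 100},
]
print(hot_links(links, interval_s=10))
```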

Proactive Monitoring and Alerts with the Enhanced ONES Rule Engine

The ONES Rule Engine has been a standout feature in previous releases, providing robust monitoring and alerting capabilities for network administrators. With the latest update, we’ve enhanced the usability and functionality, making rule creation and alert configuration even smoother and more intuitive. Whether monitoring RoCE metrics or AI-Fabric performance counters, administrators can now set up alerts with greater precision and ease. This new streamlined experience allows for better anomaly detection, helping prevent network congestion and data loss before they impact performance.

The ONES Rule Engine offers cutting-edge capabilities for proactive network management, enabling real-time anomaly detection and alerting. It provides deep visibility into AI-Fabric metrics like queue counters, PFC events, packet rates, and link failures, ensuring smooth performance for RoCE-based applications. By allowing users to set custom thresholds and conditions for congestion detection, the Rule Engine ensures that network administrators can swiftly address potential bottlenecks before they escalate.
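A threshold rule of the kind described here can be thought of as a metric name, a comparison, and a limit. The following sketch evaluates a set of such rules against one telemetry sample. The `Rule` class, the metric names, and the thresholds are all hypothetical and are not the ONES Rule Engine's actual rule format.

```python
import operator
from dataclasses import dataclass

# Supported comparison operators for a rule condition.
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}


@dataclass
class Rule:
    name: str
    metric: str
    op: str
    threshold: float

    def matches(self, sample: dict) -> bool:
        """True when the sample contains the metric and the condition holds."""
        value = sample.get(self.metric)
        return value is not None and OPS[self.op](value, self.threshold)


def evaluate(rules: list, sample: dict) -> list:
    """Return the names of all rules that fire for this sample."""
    return [rule.name for rule in rules if rule.matches(sample)]


rules = [
    Rule("queue-drops", "queue_drops_per_s", ">", 0),
    Rule("pfc-rate-high", "pfc_rx_per_s", ">=", 100),
]
sample = {"queue_drops_per_s": 3, "pfc_rx_per_s": 20}
fired = evaluate(rules, sample)
print(fired)
```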

With integrations for alerting and ticketing tools such as Slack and Zendesk, administrators can respond quickly to network anomalies. The ONES Rule Engine’s automation streamlines monitoring and troubleshooting, helping prevent data loss and maintain optimal network conditions, ultimately enhancing overall network efficiency.
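For readers curious what a Slack notification might look like on the wire, the sketch below builds a request in the shape Slack incoming webhooks accept: a JSON body with a `text` field sent by HTTP POST. The webhook URL is a placeholder, the message wording is invented for the example, and this is not a description of how ONES itself delivers alerts.

```python
import json
import urllib.request


def build_slack_request(webhook_url: str, rule_name: str, device: str, value: float) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a Slack-style text payload."""
    payload = {"text": f"[alert] {rule_name} on {device}: value={value}"}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder URL; a real incoming-webhook URL would be issued by the Slack workspace.
req = build_slack_request("https://example.invalid/webhook", "pfc-storm", "leaf-1", 12.0)
print(req.get_method(), req.data.decode("utf-8"))
# Sending would be urllib.request.urlopen(req); omitted so the sketch runs offline.
```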